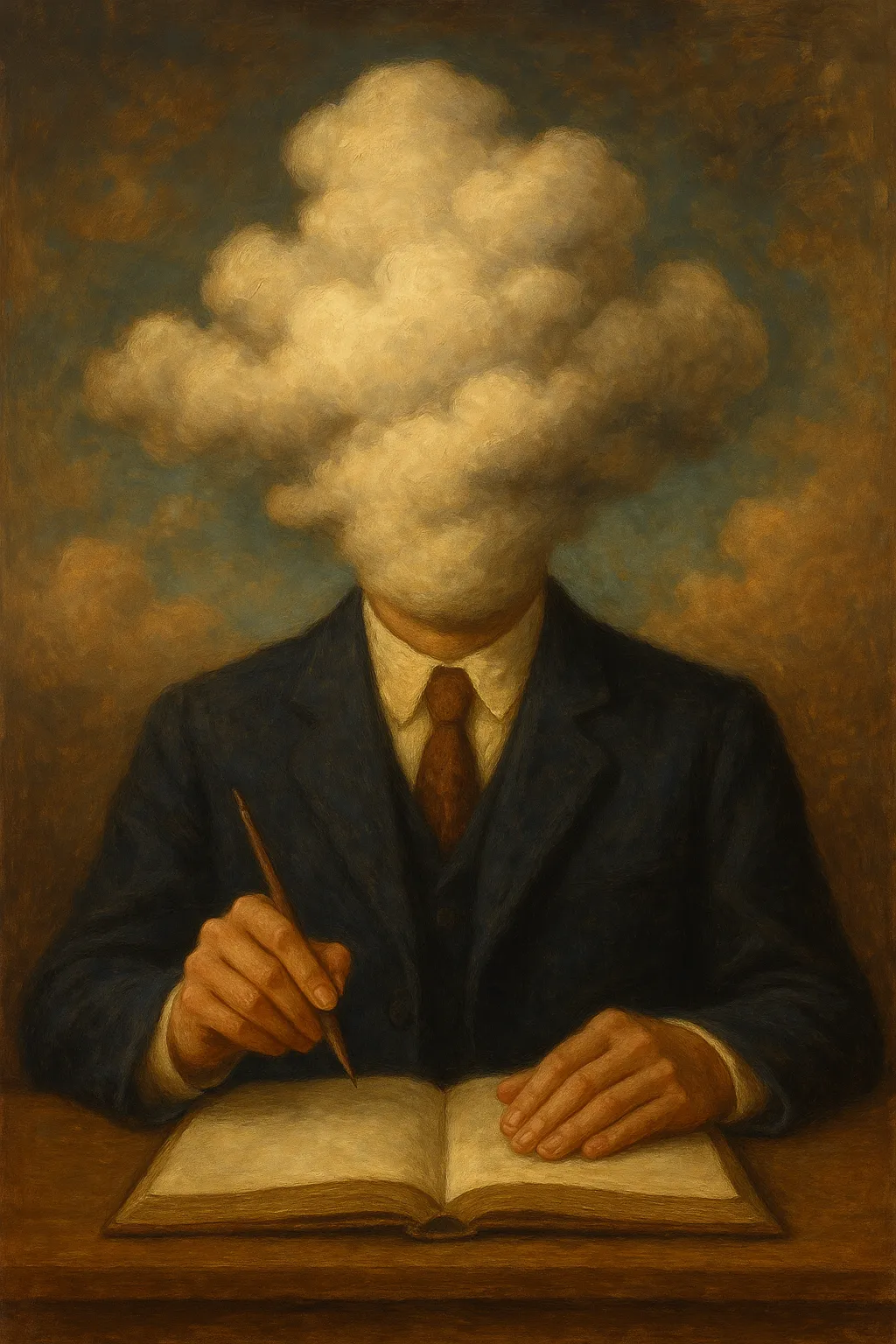

The Illusion of Thinking

Apple’s 2025 research paper, “The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity”, offers a sobering but necessary recalibration of how we evaluate AI reasoning. Large language models (LLMs) are increasingly built into tools, assistants, education platforms, and decision-making systems. Against that backdrop, the paper exposes important limitations of these systems, especially those that generate Chain-of-Thought (CoT) reasoning. Rather than relying only on standard math and coding benchmarks, the authors use puzzle environments with controllable difficulty. These let them test whether the models truly “think” and how their performance changes as problem complexity grows. The conclusions matter for how AI is developed and deployed, and for what we should expect from it.

Key Takeaways from the Research

Reasoning ≠ Reasoning-Like Output

The central argument of the paper is that CoT models, often called Large Reasoning Models (LRMs), generate outputs that look like reasoning but lack genuine algorithmic depth. Their step-by-step solutions appear to reflect statistical patterns learned during training rather than internal, logic-based problem solving.

Three Distinct Regimes of Problem Complexity

The researchers identify three performance bands as task complexity increases.

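Low Complexity - Standard LLMs (the same models without extended thinking) outperform LRMs while using fewer tokens. LRMs often reach a correct answer early but keep exploring incorrect alternatives, so their verbose reasoning adds noise rather than accuracy.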

Medium Complexity - LRMs outperform standard LLMs, showing that the extra reasoning does help at this level, although correct solutions typically emerge only after the model has explored several incorrect paths.

High Complexity - Both kinds of model collapse, with accuracy falling to near zero. This happens even on puzzles such as Tower of Hanoi that a person can solve by following a simple, well-known procedure.
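To see why difficulty escalates so quickly, consider how the paper controls complexity in puzzles such as Tower of Hanoi: by varying the number of disks N. The minimum number of moves T(N) follows a standard recurrence (move N-1 disks aside, move the largest disk, move the N-1 disks back on top). This derivation is textbook material rather than a result from the paper:

```latex
T(1) = 1, \qquad T(N) = 2\,T(N-1) + 1 \quad (N \ge 2)

T(N) + 1 = 2\,\bigl(T(N-1) + 1\bigr) = \dots = 2^{N-1}\bigl(T(1) + 1\bigr) = 2^{N}
\quad\Longrightarrow\quad T(N) = 2^{N} - 1
```

So 10 disks require 1,023 moves and 20 disks require 1,048,575, and every one of those moves must be legal for the solution to count.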

Performance Collapse Is Not Due to Token Limits

One of the most striking findings is that the collapse is not caused by running out of tokens, and extra token budget does not help. As problems approach the collapse point, LRMs actually spend fewer reasoning tokens, even though they remain well within their generation limits. Instead of working harder on harder problems, they cut their reasoning short and fall back on incorrect guesses.

Lack of Algorithmic Grounding

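LLMs, including LRMs, do not appear to develop or reliably apply general strategies such as recursive planning or dynamic programming. The paper reports that even when the solution algorithm for Tower of Hanoi was supplied in the prompt, performance did not improve, and collapse occurred at roughly the same complexity. Their solutions are not guided by domain-specific abstractions or symbolic manipulation, which are hallmarks of true reasoning in humans and classical AI systems.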
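For contrast, a general recursive strategy for one of the paper’s puzzles, Tower of Hanoi, fits in a few lines. The sketch below is the standard textbook recursion rather than anything taken from the paper. The peg labels "A", "B", "C" and the function name `hanoi` are our own illustrative choices:

```python
def hanoi(n: int, source: str = "A", target: str = "C", spare: str = "B") -> list[tuple[str, str]]:
    """Return the optimal move list for n disks as (from_peg, to_peg) pairs."""
    if n == 0:
        return []
    # 1. Move the top n-1 disks out of the way, onto the spare peg.
    moves = hanoi(n - 1, source, spare, target)
    # 2. Move the largest disk directly to the target peg.
    moves.append((source, target))
    # 3. Move the n-1 disks from the spare peg back on top of the largest disk.
    moves += hanoi(n - 1, spare, target, source)
    return moves


print(hanoi(2))        # [('A', 'B'), ('A', 'C'), ('B', 'C')]
print(len(hanoi(10)))  # 1023
```

Every move is determined mechanically by the recursion. At large N, the difficulty lies in carrying out a long, exact procedure without error, not in discovering a clever insight.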

Implications for Real-World AI Use

Caution in Deployment

The findings underscore a critical vulnerability: AI models can appear competent because their language is fluent and well structured, yet fail catastrophically once problems pass a certain complexity. This illusion of competence is especially dangerous in domains such as legal reasoning, medical diagnosis, and educational tutoring, where logical soundness is essential.

Overestimation of AGI Progress

The results challenge the prevailing narrative that LLMs are on a straightforward trajectory toward Artificial General Intelligence (AGI). Reasoning models such as Claude 3.7 Sonnet (with thinking enabled) and DeepSeek-R1, both evaluated in the paper, can simulate aspects of reasoning. Their failure to generalize to truly complex problems, however, suggests they are not yet capable of “thinking” in the human sense.

The Role of Tool Integration

This research highlights the growing importance of tool-augmented models: AI systems that call external tools (e.g., search engines, code execution environments, symbolic solvers) to perform tasks beyond their core statistical capabilities. Future systems may rely on external reasoning engines rather than internalizing all logic within the model itself.
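A minimal sketch of the tool-calling pattern follows. It assumes the model has been prompted to emit a JSON object naming a tool and its arguments. The JSON shape, the tool names, and the `dispatch` function are hypothetical illustrations, not any specific vendor’s API. The point is that the exact computation runs in ordinary code rather than in the model’s token stream:

```python
import json
from typing import Any, Callable


# Hypothetical tools: names and signatures are illustrative only.
def hanoi_min_moves(n: int) -> int:
    """Minimum number of moves for an n-disk Tower of Hanoi: 2**n - 1."""
    return 2 ** n - 1


def exact_multiply(a: int, b: int) -> int:
    """Exact integer multiplication (Python ints have arbitrary precision)."""
    return a * b


TOOLS: dict[str, Callable[..., Any]] = {
    "hanoi_min_moves": hanoi_min_moves,
    "exact_multiply": exact_multiply,
}


def dispatch(model_output: str) -> Any:
    """Parse a JSON tool call emitted by the model and run the matching tool."""
    call = json.loads(model_output)
    name = call["tool"]
    if name not in TOOLS:
        raise ValueError(f"unknown tool: {name}")
    return TOOLS[name](**call.get("args", {}))


print(dispatch('{"tool": "hanoi_min_moves", "args": {"n": 20}}'))  # 1048575
```

In a full system, the tool’s result would be fed back to the model as context for its final answer. The model decides *what* to compute, and the tool guarantees the computation is exact.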

The Future of AI Development

Toward Hybrid AI Architectures

The future likely lies in hybrid systems that combine the linguistic fluency of LLMs with the symbolic rigor of classical AI. Such systems might include explicit planning modules, memory structures, or algorithmic solvers, allowing them to approach complex reasoning tasks with more reliability and transparency.
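One concrete form of such a hybrid is a propose-and-verify loop. The language model proposes a complete plan, and a symbolic checker either accepts it or returns precise feedback for another attempt. The sketch below assumes Tower of Hanoi as the task and a plan given as (from_peg, to_peg) pairs. The parameter `propose` stands in for a hypothetical LLM call and is not part of any real API, and `check_plan` and `solve_with_verifier` are illustrative names:

```python
from typing import Callable, Optional

Move = tuple[str, str]
# Hypothetical stand-in for an LLM call: receives the disk count and the
# verifier's feedback from the previous attempt (None on the first try).
Proposer = Callable[[int, Optional[str]], list[Move]]


def check_plan(n: int, plan: list[Move]) -> Optional[str]:
    """Simulate the plan; return None if it legally solves the puzzle, else feedback."""
    # Each peg lists disks bottom to top; disk 1 is the smallest.
    pegs = {"A": list(range(n, 0, -1)), "B": [], "C": []}
    for step, (src, dst) in enumerate(plan):
        if src not in pegs or dst not in pegs:
            return f"step {step}: unknown peg in move {(src, dst)}"
        if not pegs[src]:
            return f"step {step}: peg {src} is empty"
        disk = pegs[src][-1]
        if pegs[dst] and pegs[dst][-1] < disk:
            return f"step {step}: cannot place disk {disk} on smaller disk {pegs[dst][-1]}"
        pegs[dst].append(pegs[src].pop())
    if pegs["C"] != list(range(n, 0, -1)):
        return "plan is legal but does not end with every disk on peg C"
    return None


def solve_with_verifier(n: int, propose: Proposer, max_attempts: int = 3) -> Optional[list[Move]]:
    """Request plans until one passes the checker or the attempts run out."""
    feedback: Optional[str] = None
    for _ in range(max_attempts):
        plan = propose(n, feedback)
        feedback = check_plan(n, plan)
        if feedback is None:
            return plan  # verified: every move legal and the goal reached
    return None  # no verified plan; escalate instead of trusting an unchecked answer
```

Because the checker simulates every move, a plan that merely looks plausible cannot pass. In this design, the verifier’s judgment is trusted over the fluency of the model’s output.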

Reassessing AI Benchmarks

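The paper calls into question the reliability of current benchmark tasks, many of which may reward statistical mimicry or memorized training data rather than true reasoning. New benchmarks that isolate abstract reasoning from linguistic familiarity will be essential to fairly evaluate future AI models. Puzzles whose complexity can be dialed up precisely and whose solutions can be checked step by step are one example.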
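Such a benchmark can be sketched as a sweep over a complexity parameter, with every answer scored by an exact checker rather than compared against reference text. This loosely follows the paper’s approach of varying puzzle difficulty. The function and parameter names are hypothetical, and the stand-in model in the usage example is a toy, not a real system or real results:

```python
from typing import Callable, Dict, Iterable, TypeVar

InstanceT = TypeVar("InstanceT")  # a generated problem instance
AnswerT = TypeVar("AnswerT")      # the model's answer to that instance


def accuracy_by_complexity(
    levels: Iterable[int],
    make_instance: Callable[[int, int], InstanceT],
    model: Callable[[InstanceT], AnswerT],
    is_correct: Callable[[InstanceT, AnswerT], bool],
    trials: int = 5,
) -> Dict[int, float]:
    """Return the fraction of correct answers at each complexity level."""
    results: Dict[int, float] = {}
    for level in levels:
        correct = 0
        for trial in range(trials):
            # The trial index lets make_instance generate varied instances per level.
            instance = make_instance(level, trial)
            if is_correct(instance, model(instance)):
                correct += 1
        results[level] = correct / trials
    return results


def toy_model(n: int) -> int:
    """Toy stand-in for a model: exact up to 4 disks, wrong beyond that."""
    return 2 ** n - 1 if n <= 4 else 0


def exact_check(n: int, answer: int) -> bool:
    """Exact checker: the minimum Hanoi move count for n disks."""
    return answer == 2 ** n - 1


print(accuracy_by_complexity(range(1, 7), lambda level, trial: level, toy_model, exact_check))
# {1: 1.0, 2: 1.0, 3: 1.0, 4: 1.0, 5: 0.0, 6: 0.0}
```

Reporting accuracy per complexity level, rather than one aggregate score, is what makes a collapse point like the one the paper describes visible.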

Human-in-the-Loop AI Systems

Given the brittleness of AI at high complexity levels, a practical takeaway is to design AI systems with human oversight built in. Rather than aiming for fully autonomous decision-making, near-term AI should support human reasoning, augmenting it rather than replacing it.